On 10 November 2025, Open Pharma brought together 50 minds from pharma, publishing, academia, patient organizations and more for Open Pharma Day: truth, transparency and trust to combat misinformation in medical research communication. In this post, we summarize Open Pharma Day through six big ideas from the faculty and look to the future through insights shared by the delegates.

Provide people with good questions

From Artificial intelligence (AI) and misinformation in medical research communication: opportunities and risks with Tracey Brown (Director of Sense about Science)

In an era of AI-driven content and rampant misinformation, the most powerful tool for finding the truth is knowing what questions to ask.

Equipping people – from patients to healthcare professionals (HCPs) – with the ability to ask good, thoughtful, evidence-based questions helps them navigate the plethora of available health research information, weed out potential misinformation and make informed decisions.

Looking to the future

The future of trustworthy communication lies in empowering curiosity in everyone. By designing systems, tools and conversations that foster critical questioning, we can counter misinformation and build resilience to it. This could involve:

- working with educators to teach even the youngest students about the scientific process and how to question ‘facts’

- developing a better understanding of how to critically evaluate AI-generated outputs

- educating people on how to develop counterarguments and build confidence in responding to misinformation

- communicating to patients and the public what risk means in the context of biomedical research.

People have a hunger for the truth

From Dissemination of reliable medical information in an era of misinformation with Tracey Brown, Brian Southwell (Distinguished Fellow at RTI International), Helen Pearson (Senior Editor at Nature) and Richard Smith (Chair of Open Pharma)

One of the reasons misinformation can spread quickly is that it is novel and contentious – characteristics that are favoured by social media algorithms. Yet, despite the charms of misinformation, people crave clarity and credible answers.

The challenge is that high-quality, evidence-based communication takes time and resources. When reliable information is slow to surface, misleading narratives fill the gap. To meet this hunger, we need collaboration across science, media and healthcare to ensure trustworthy information is not only accurate but also understandable and discoverable.

Looking to the future

By investing in transparency and speed without sacrificing rigour, we can close the gap between curiosity and credibility to rebuild trust in medical research. To do that, we need:

- dialogue – people used to hear diverse perspectives, but it’s easy to curate your feed on social media so that it reinforces your own biases; seeing all sides of an issue can inform decision-making

- easy access to validated information

- methods to ensure AI tools are regulated and use trust-marked information

- encounters with possible misinformation to be seen as opportunities to learn about community values

- published research to be more understandable through extenders and plain language

- funding of independent critical voices (e.g. patient groups).

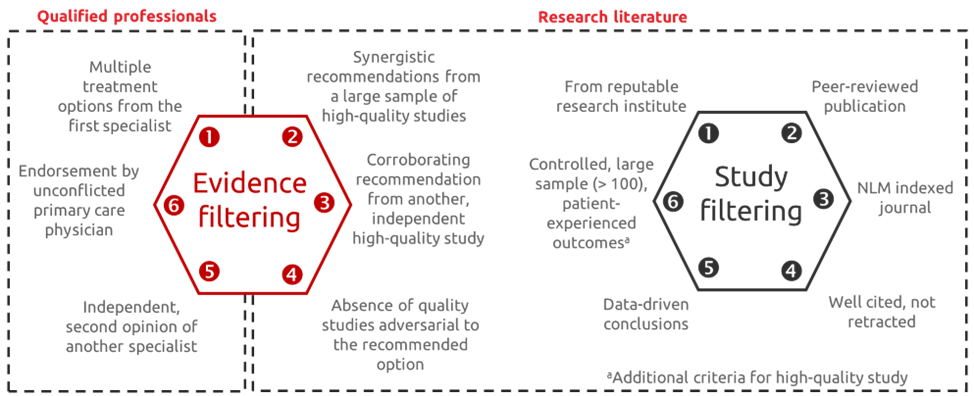

No more banality

From Leadership and innovation to promote equitable access to reliable medical information with Andrew Balas (Professor at Augusta University)

Health decisions are complex, relying on a diverse – and sometimes contradictory – evidence base. In his presentation, Andrew Balas urged the scientific community to move beyond simplistic, one-dimensional evidence assessment towards a hexagonal assessment model. This approach involves evaluating evidence and studies from multiple perspectives, drawing on insights from qualified professionals and published literature to support well-rounded healthcare decision-making.

Looking to the future

Multidimensional thinking requires new communication channels and trust markers. This could be achieved by:

- building a trusted, open-source repository to make all research content discoverable

- creating a mechanism for pharma experts to enter into scientific discourse in the public domain

- advocating for statutory clinical trial registration so transparent reporting is officially validated

- being open about uncertainty and what isn’t known, as well as what is

- engaging stakeholders on a human level, not only through digital interactions.

Let’s have patient engagement!

From The patient perspective: the challenges of accessing reliable medical information with Ellie Challis (Trustee, Promotion and Awareness at PTEN UK & Ireland Patient Group), Durhane Wong-Rieger (President and CEO of the Canadian Organization for Rare Disorders) and Chris Winchester (CEO of Oxford PharmaGenesis)

Patients often turn to social media for health advice, where misinformation is rife. To combat this, we must empower patients and advocates to spot inaccuracies, report misleading content and amplify trustworthy sources, including HCPs. HCPs are trusted channels through which reliable information reaches patients, and this role should be leveraged to help patients make evidence-based decisions about their health. Importantly, the misconception that patients are passive recipients of information needs to be challenged. When treated as active partners, patients can have a vital role in shaping medical evidence for the benefit of all.

Looking to the future

The future of patient engagement means creating two-way communication ecosystems in which patients, advocates, HCPs, researchers and funders collaborate to produce and share accurate information. By integrating patient voices into strategy and leveraging digital platforms responsibly, we can foster a culture of trust and resilience that is grounded in respect rather than tokenism. To achieve this, we need to:

- let go of educational elitism – everyone will benefit from easy-to-understand information

- involve patients in above-brand discussions

- listen with respect

- build relationships to build trust

- be honest and own up to mistakes

- engage with health journalists

- ask patients what they want, not what they can do for us.

In the future, we won’t trust anything written

From The role of publishers in combatting misinformation with Deborah Dixon (Global Editorial Director, Medicine and Science Journals of Oxford University Press), Nathalie Le Bot (Editorial Director for Health and Clinical Sciences at Nature Communications), Jo Wixon (Director of External Analysis at Wiley) and Richard Smith

As AI reshapes scholarly publishing, trust can no longer be assumed; it must be earned and demonstrated.

Publishers have a critical role to play in safeguarding research integrity by making the scientific record open, verifiable and resistant to organized misinformation. AI can help, but only as a companion to human oversight, not a replacement.

Looking to the future

The future of trust in medical publishing will hinge on robust trust markers, clear provenance, reproducible data and transparent editorial processes. Publishers must:

- work together

- create infrastructures that enable researchers to share everything about their work openly

- combine technology with human judgement to build a publishing ecosystem in which reliability is visible, collaboration is the norm and misinformation has nowhere to hide.

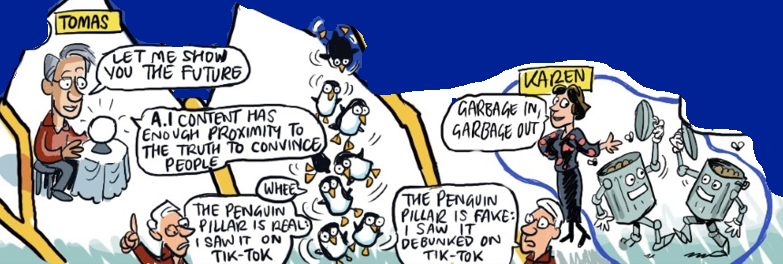

Garbage in, garbage out

From Discoverability by design to make pharma knowledge work in an AI-first world with Tomas Rees (Director of Innovation at Oxford PharmaGenesis) and Karin Slater (Senior Data Scientist at Oxford PharmaGenesis)

AI outputs are everywhere, but they are only as good as the data they are based on. What if there were a way to create a trusted, reliable, AI-friendly source of validated healthcare knowledge? Decentralized knowledge graphs (DKGs) offer a powerful solution that integrates blockchain, retrieval-augmented generation and FAIR (findable, accessible, interoperable and reusable) data principles to ensure the integrity and transparency of AI-generated research assessments.

Looking to the future

The future of trustworthy AI use in healthcare depends on credible, connected and computable knowledge ecosystems. By adopting DKGs, we can enable personalized patient education, automated literature reviews, clinical decision support and more, all while reducing the risk of misinformation.

Become a trust builder

Don’t just read. Act!

All of us can combat misinformation through actions, both big and small. Here’s what you can do today.

- Ask one good question about a health claim you see online (and share the answer!).

- Amplify trustworthy information by critically reviewing the references you use.

- Meaningfully engage with patients and advocates on your next project.

- Support transparency by advocating for plain language summaries or open peer review at your organization.

- Audit one piece of content you’ve produced for FAIR principles (findable, accessible, interoperable and reusable).

- Share this post with your network and start a conversation about trust.

Let us know how you’ve become a trust builder by joining the conversation on LinkedIn.

Thanks to David Lewis for capturing these big ideas in his live illustration of Open Pharma Day.